Codetown

Codetown ::: a software developer's community

What's the difference between grid computing and cloud computing?

I don't clearly understand the difference between these two concepts. Someone told me the essential difference is that cloud computing gives you a large amount of storage, while the grid offers more than storage: it also gives you access to a lot of computing power.

Can anyone who understands these two concepts more clearly explain them?

Replies to This Discussion

Permalink Reply by Thomas Michaud on October 26, 2011 at 3:38pm

I don't claim to be the expert, but the difference is (I think) in use.

Grid represents a scalable framework. You write your algorithm and your code and use as much computing power as your wallet can afford. (Useful, as some work is highly parallelizable.)
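To make "highly parallelizable" concrete, here is a rough Python sketch, not anything specific to a particular grid product. It assumes the job (counting primes, chosen only for illustration) can be cut into chunks that share no state. A local thread pool stands in for grid nodes, and the names `count_primes`, `split_range`, and `parallel_count` are made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def count_primes(bounds):
    """Count primes in the half-open range [lo, hi)."""
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        # Trial division: n is prime if no d in [2, sqrt(n)] divides it.
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def split_range(lo, hi, parts):
    """Cut [lo, hi) into at most `parts` contiguous, independent chunks."""
    step = (hi - lo + parts - 1) // parts
    return [(start, min(start + step, hi)) for start in range(lo, hi, step)]


def parallel_count(lo, hi, workers):
    # Each chunk is independent, so adding workers (or, on a real grid,
    # machines) changes how many chunks run at once, not the result.
    chunks = split_range(lo, hi, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))


if __name__ == "__main__":
    print(parallel_count(0, 10_000, workers=4))
```

On a real grid the chunks would be shipped to separate machines rather than threads, but the shape is the same: split, compute independently, combine.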

Cloud computing offers storage (true), but it also covers the applications. Ideally, with cloud computing you don't need to have certain applications on your desktop: as long as you can reach the cloud, you can get, update, and use your data.

Permalink Reply by Hervé-greg MOKWABO on October 26, 2011 at 3:49pm

Thanks, Thomas.

What I got:

Grid - a lot of computing power, suited to highly parallelizable work

Cloud - storage, and you don't need to have certain applications on your desktop (is that just like a server application?)

Can someone tell us more?

Permalink Reply by Bradlee Sargent on October 27, 2011 at 10:58pm

I think if you look at the history, you will understand some of the difference.

In my own experience, the grid began with Oracle using it as a type of metadatabase, which would point to multiple databases residing on different but uniform hardware systems. So if a company had multiple Unix boxes and needed to increase the size of its database, instead of purchasing additional hardware it could implement the grid database and combine its multiple Unix servers into one database resource.
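As a rough illustration of "several servers combined into one database resource", here is a small Python sketch. It is not how Oracle's product works internally. It only assumes a facade that routes each key to one of several backing databases. `GridDatabase` is a hypothetical name, and in-memory SQLite connections stand in for the separate Unix servers.

```python
import sqlite3
import zlib


class GridDatabase:
    """Hypothetical facade: several backing databases presented as one."""

    def __init__(self, node_count):
        # Each in-memory SQLite connection stands in for one server.
        self.nodes = [sqlite3.connect(":memory:") for _ in range(node_count)]
        for node in self.nodes:
            node.execute("CREATE TABLE kv (key TEXT PRIMARY KEY, value TEXT)")

    def _node_for(self, key):
        # A stable hash decides which node owns a given key.
        return self.nodes[zlib.crc32(key.encode("utf-8")) % len(self.nodes)]

    def put(self, key, value):
        self._node_for(key).execute(
            "INSERT OR REPLACE INTO kv (key, value) VALUES (?, ?)", (key, value)
        )

    def get(self, key):
        row = self._node_for(key).execute(
            "SELECT value FROM kv WHERE key = ?", (key,)
        ).fetchone()
        return row[0] if row else None

    def count(self):
        # Whole-dataset queries fan out to every node and combine the results.
        return sum(
            node.execute("SELECT COUNT(*) FROM kv").fetchone()[0]
            for node in self.nodes
        )
```

The caller only sees `put`, `get`, and `count`; which box actually holds a row is hidden behind the facade, which is the "one database resource" idea in miniature.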

Cloud goes further: it offers not only a database but an entire server, including the operating system.

The cloud exposes an operating system, whereas a grid exposes a database.

But I am no buzzword expert, so I might be wrong.

Permalink Reply by Jackie Gleason on October 28, 2011 at 10:17am

I just talked to a buddy about this. Essentially, the Oracle Grid product is different because it runs the DB in memory, so access times are a lot quicker. I don't think it is really a matter of grid vs. cloud so much as grid computing being a way to handle DB transactions faster.

He said their grid servers had something like 72 GB of RAM. Freaking crazy.

Permalink Reply by Hervé-greg MOKWABO on November 29, 2011 at 10:33am

Bradlee, what do you think about Jackie's reply?

InfoQ Reading List

Presentation: How to Unlock Insights and Enable Discovery Within Petabytes of Autonomous Driving Data

Kyra Mozley discusses the evolution of autonomous vehicle perception, moving beyond expensive manual labeling to an embedding-first architecture. She explains how to leverage foundation models like CLIP and SAM for auto-labeling, RAG-inspired search, and few-shot adapters. This talk provides engineering leaders a blueprint for building modular, scalable vision systems that thrive on edge cases.

By Kyra MozleyArticle Series - AI Assisted Development: Real World Patterns, Pitfalls, and Production Readiness

In this series, we examine what happens after the proof of concept and how AI becomes part of the software delivery pipeline. As AI transitions from proof of concept to production, teams are discovering that the challenge extends beyond model performance to include architecture, process, and accountability. This transition is redefining what constitutes good software engineering.

By Arthur CasalsHow CyberArk Protects AI Agents with Instruction Detectors and History-Aware Validation

To prevent agents from obeying malicious instructions hidden in external data, all text entering an agent's context must be treated as untrusted, says Niv Rabin, principal software architect at AI-security firm CyberArk. His team developed an approach based on instruction detection and history-aware validation to protect against both malicious input data and context-history poisoning.

By Sergio De SimoneAnthropic announces Claude CoWork

Introducing Claude Cowork: Anthropic's groundbreaking AI agent revolutionizing file management on macOS. With advanced automation capabilities, it enhances document processing, organizes files, and executes multi-step workflows. Users must be cautious of backup needs due to recent issues. Explore its potential for efficient office solutions while ensuring data integrity.

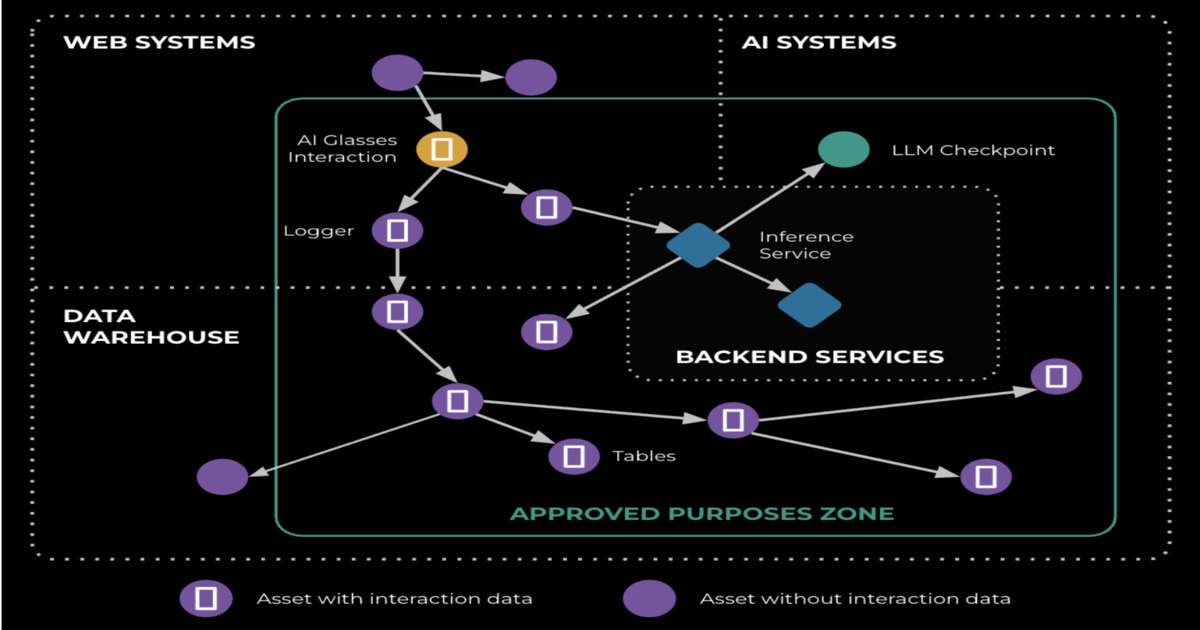

By Andrew HoblitzellTracking and Controlling Data Flows at Scale in GenAI: Meta’s Privacy-Aware Infrastructure

Meta has revealed how it scales its Privacy-Aware Infrastructure (PAI) to support generative AI development while enforcing privacy across complex data flows. Using large-scale lineage tracking, PrivacyLib instrumentation, and runtime policy controls, the system enables consistent privacy enforcement for AI workloads like Meta AI glasses without introducing manual bottlenecks.

By Leela Kumili

© 2026 Created by Michael Levin.
