Codetown

Codetown ::: a software developer's community

JavaFX 2.2 Canvas

One of the cool new features of the JavaFX 2.2 developer preview release is a new Canvas node that lets you draw freely within an area of the JavaFX scene, much like the HTML 5 Canvas. You can download this release for Windows, Mac, and Linux from JavaFX Developer Preview.

Being adventurous, I decided to take the JavaFX Canvas for a spin around the block. While searching for cool HTML 5 Canvas examples, I came across Dirk Weber's blog post comparing the performance of HTML 5 Canvas, SVG, and Adobe Flash, "An experiment: Canvas vs. SVG vs. Flash". This looked interesting for a Canvas beginner like me, so I decided to copy his implementation and see how it runs in JavaFX.

This turned out to be pretty straightforward. Dirk's original JavaScript application for the HTML 5 Canvas draws a spirograph at the top of the screen, with 4 sliders beneath it for changing the number of rotations, the number of particles, and the inner and outer radius of the spirograph. A text display at the top shows the frames per second after the image is drawn. Each time you move a slider, the spirograph is redrawn with the new values and the frames-per-second reading is updated.

To do the same thing in JavaFX, I first created a JavaFX Application class with a Stage and Scene, then placed the Canvas at the top of the scene with 4 sliders below it, followed by a Label that reports the frames per second as defined in Dirk's original JavaScript implementation. The one change I made to Dirk's implementation was to use the JavaFX Point2D class for points instead of arrays of doubles.
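As a rough illustration of the point calculation, here is a minimal sketch in plain Java. It is not Dirk's formula or the code from this post: it assumes a standard hypocycloid parameterization, and the names `SpirographSketch`, `Point`, and `computePoints` are made up for the example. The small `Point` class stands in for `javafx.geometry.Point2D` so the sketch runs without JavaFX on the classpath.

```java
import java.util.ArrayList;
import java.util.List;

class SpirographSketch {

    /** Minimal immutable 2D point; a stand-in for javafx.geometry.Point2D. */
    static final class Point {
        final double x;
        final double y;

        Point(double x, double y) {
            this.x = x;
            this.y = y;
        }
    }

    /**
     * Computes `particles` points along a hypocycloid (an assumed formula,
     * not necessarily the one used in the original application).
     * innerRadius must be non-zero.
     */
    static List<Point> computePoints(int rotations, int particles,
                                     double innerRadius, double outerRadius) {
        List<Point> points = new ArrayList<>(particles);
        double diff = outerRadius - innerRadius;
        double ratio = diff / innerRadius;
        for (int i = 0; i < particles; i++) {
            // Angle swept so far: `rotations` full turns spread over all particles.
            double t = 2.0 * Math.PI * rotations * i / particles;
            double x = diff * Math.cos(t) + innerRadius * Math.cos(ratio * t);
            double y = diff * Math.sin(t) - innerRadius * Math.sin(ratio * t);
            points.add(new Point(x, y));
        }
        return points;
    }

    public static void main(String[] args) {
        // Hypothetical slider values, chosen only for the demonstration.
        for (Point p : computePoints(1, 4, 1.0, 3.0)) {
            System.out.printf("%.3f, %.3f%n", p.x, p.y);
        }
    }
}
```

In a JavaFX version, the same loop could build `Point2D` instances, and consecutive points could be joined with `GraphicsContext.strokeLine` on the context returned by `Canvas.getGraphicsContext2D()`.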

My original goal was just to become familiar with the JavaFX Canvas object, but as I played around I noticed something about the performance. When I ran Dirk's HTML 5 and Flash versions, I got a consistent rate of 50-70 fps as I adjusted the sliders (Mac OS X 10.7.4, 2.6 GHz Intel Core 2 Duo, 4 GB RAM). However, when I ran my JavaFX version, the first draw after startup came in at the low 40s fps. Then I noticed that performance improved as I adjusted the sliders: low 80s fps after the first adjustment, mid 120s after the fifth, 1000 fps a few adjustments later, and eventually Infinity fps. I didn't believe the Infinity reading, so I stepped through the code in the debugger, only to find that it was taking less than a millisecond to calculate and draw the spirograph.
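The Infinity reading follows from how floating-point division behaves in Java. The sketch below assumes the frame rate is computed as 1000 divided by the elapsed milliseconds, which is a guess at the original calculation rather than the code from this post; `FpsSketch` and its method names are hypothetical. When a draw finishes in under a millisecond, the elapsed time rounds down to 0 and the division yields Infinity instead of throwing.

```java
class FpsSketch {

    /** Frames per second for a single frame that took elapsedMillis to draw. */
    static double framesPerSecond(long elapsedMillis) {
        // Floating-point division by zero does not throw in Java; it yields Infinity.
        return 1000.0 / elapsedMillis;
    }

    /** Same measurement from a nanosecond interval, which stays finite for sub-millisecond frames. */
    static double framesPerSecondNanos(long elapsedNanos) {
        return 1_000_000_000.0 / elapsedNanos;
    }

    public static void main(String[] args) {
        System.out.println(framesPerSecond(25));           // a 25 ms frame
        System.out.println(framesPerSecond(0));            // a frame faster than the millisecond clock can resolve
        System.out.println(framesPerSecondNanos(250_000)); // a 0.25 ms frame measured in nanoseconds
    }
}
```

Timing with `System.nanoTime()` instead of millisecond timestamps is one way to get a finite reading for very fast frames.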

I assume that this behavior reflects the HotSpot compiler kicking in after a few iterations of the spirograph calculation. Either way, it sure is fast.
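One way to look for a warm-up effect like this is to time the same calculation over several consecutive runs. The sketch below is an illustration only: `WarmUpSketch`, `workload`, and `timeRuns` are hypothetical names, the trigonometric loop is a stand-in for the spirograph calculation, and the timings will vary by machine and JVM, so a drop in later runs is likely but not guaranteed.

```java
class WarmUpSketch {

    /** A stand-in for the spirograph calculation: some repeated trigonometry. */
    static double workload(int n) {
        double sum = 0;
        for (int i = 0; i < n; i++) {
            sum += Math.sin(i) * Math.cos(i);
        }
        return sum;
    }

    /** Runs the workload `runs` times and returns each run's duration in nanoseconds. */
    static long[] timeRuns(int runs, int n) {
        long[] nanos = new long[runs];
        double sink = 0;
        for (int r = 0; r < runs; r++) {
            long start = System.nanoTime();
            sink += workload(n);
            nanos[r] = System.nanoTime() - start;
        }
        // Use the accumulated result so the JIT cannot discard the workload entirely.
        if (Double.isNaN(sink)) {
            System.out.println("unexpected NaN");
        }
        return nanos;
    }

    public static void main(String[] args) {
        long[] nanos = timeRuns(10, 200_000);
        for (int r = 0; r < nanos.length; r++) {
            System.out.println("run " + (r + 1) + ": " + nanos[r] / 1000 + " us");
        }
    }
}
```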

The JavaFX source can be downloaded from here:


InfoQ Reading List

New Research Reassesses the Value of AGENTS.md Files for AI Coding

Despite widespread industry recommendations, a new ETH Zurich paper concludes that AGENTS.md files may often hinder AI coding agents. The researchers recommend omitting LLM-generated context files entirely and limiting human-written instructions to non-inferable details, such as highly specific tooling or custom build commands.

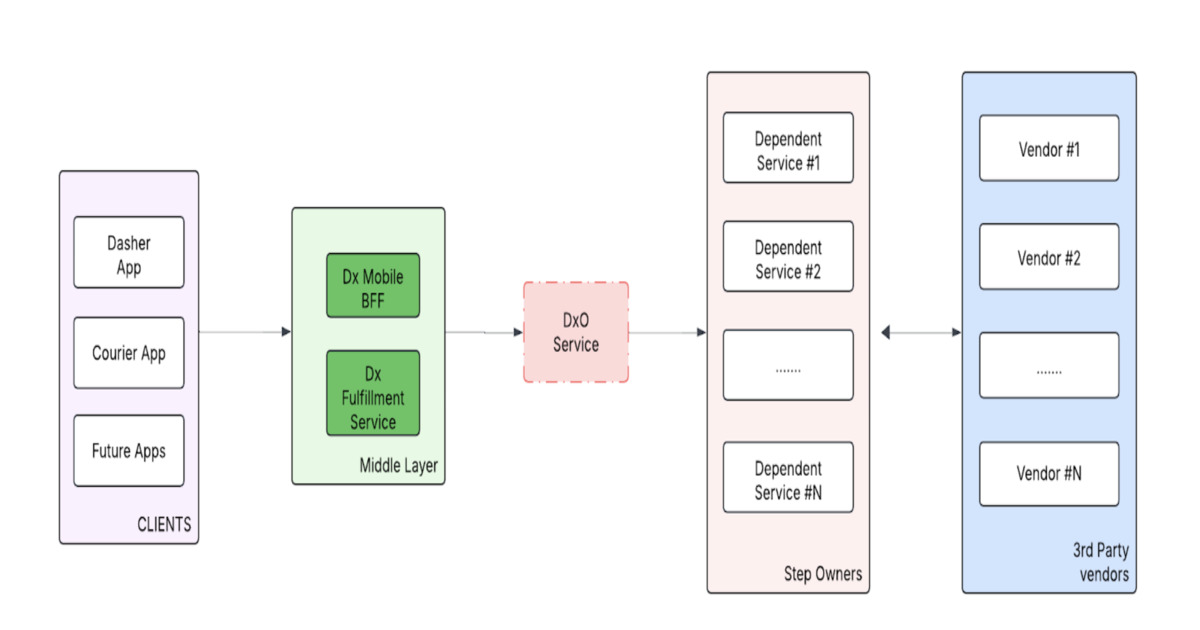

By Bruno CouriolArchitecting for Global Scale: Inside DoorDash’s Unified, Composable Dasher Onboarding Platform

DoorDash has rebuilt its Dasher onboarding into a unified, modular platform to support global expansion. The new architecture uses reusable step modules, a centralized status map, and workflow orchestration to ensure consistent, localized onboarding experiences. This design reduces complexity, supports market-specific variations, and enables faster rollout to new countries.

By Leela KumiliCNCF Graduates Dragonfly, Marking Major Milestone for Cloud-Native Image Distribution

The Cloud Native Computing Foundation (CNCF) announced recently that Dragonfly, its open source image and file distribution system, has reached graduated status, the highest maturity level within the CNCF project lifecycle.

By Craig RisiOpenAI Secures AWS Distribution for Frontier Platform in $110B Multi-Cloud Deal

OpenAI's $110B funding includes AWS as the exclusive third-party distributor for the Frontier agent platform, introducing an architectural split: Azure retains stateless API exclusivity; AWS gains stateful runtime environments via Bedrock. Deal expands the existing $38B AWS agreement by $100B and commits 2GW of Trainium capacity.

By Steef-Jan WiggersPresentation: So You’ve Decided To Do a Technical Migration

Sophie Koonin discusses the realities of large-scale technical migrations, using Monzo’s shift to TypeScript as a roadmap. She explains how to handle "bends in the road," from documentation and tooling to setting measurable milestones. Sophie shares vital lessons on balancing technical debt with feature work and provides a framework for deciding if a migration is truly worth the effort.

By Sophie Koonin
