Codetown

Codetown ::: a software developer's community

Kotlin Thursdays - Introduction to Functional Programming Part 2

Resources

- Higher-Order Functions and Lambdas: https://kotlinlang.org/docs/reference/lambdas.html

- FP in Kotlin Part 1: https://medium.com/kotlin-thursdays/functional-programming-in-kotli...

Introduction

Last week, we went over higher-order functions in Kotlin. We learned that higher-order functions can accept functions as parameters and can also return functions. This week, we will take a look at lambdas. Lambdas are another kind of function, and they are very popular in the functional programming world.
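As a quick refresher, here is a minimal sketch of a higher-order function that both accepts a function and returns one. The name `applyTwice` is hypothetical and not taken from Part 1. The argument is written as a lambda, using the syntax this post introduces below.

```kotlin
// Hypothetical helper: takes a function f and returns a new function
// that applies f two times to its input.
fun applyTwice(f: (Int) -> Int): (Int) -> Int {
    return { x: Int -> f(f(x)) }
}

fun main() {
    // The lambda is the only argument, so it can sit outside the parentheses.
    // Adding 3 twice gives a function that adds 6.
    val addSix = applyTwice { n: Int -> n + 3 }
    println(addSix(10)) // 16
}
```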

Logic & Data

Computer programs are made up of two parts: logic and data. Usually, logic is described in functions and data is passed to those functions. The functions do things with the data and return a result. When we write a function, we typically create a named function. As we saw last week, this is a typical named function:

```kotlin
fun hello(name: String): String {
    return "Hello, $name"
}
```

Then you can call this function:

```kotlin
fun main() {
    println(hello("Matt"))
}
```

This prints:

Hello, Matt

Functions as Data

In the functional programming world, functions can be treated as data. A lambda can do the same work as a named function, but its body can be passed directly into other functions. A lambda can also be assigned to a variable as though it were just a value.
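To make "functions as data" concrete, here is a minimal sketch that stores lambdas in a `Map` and passes one into another function alongside ordinary data. The map keys, the Spanish greeting, and the `greetWith` helper are illustrative assumptions, not part of the original post.

```kotlin
// Lambdas stored in a Map, exactly like any other values.
// Keys and greeting texts are illustrative only.
val greetings: Map<String, (String) -> String> = mapOf(
    "en" to { name: String -> "Hello, $name" },
    "es" to { name: String -> "Hola, $name" }
)

// Hypothetical helper: receives logic (greet) and data (name) side by side.
fun greetWith(greet: (String) -> String, name: String): String = greet(name)

fun main() {
    println(greetWith(greetings.getValue("en"), "Matt")) // Hello, Matt
    println(greetWith(greetings.getValue("es"), "Matt")) // Hola, Matt
}
```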

Lambda Syntax

Lambdas are similar to named functions, but they have no name and their syntax looks a little different. A named function in Kotlin looks like this:

```kotlin
fun hello(): String {
    return "Hello World"
}
```

The equivalent lambda expression looks like this:

```kotlin
{ "Hello World" }
```
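A sketch, assuming we want to actually call that lambda: assigning it to a variable gives it the type `() -> String`, and calling it returns the value of its last expression, so no `return` keyword is needed. The names `sayHello` and `helloNamed` are hypothetical.

```kotlin
// A lambda with no parameters; its inferred type is () -> String.
val sayHello = { "Hello World" }

// The equivalent named function, for comparison.
fun helloNamed(): String {
    return "Hello World"
}

fun main() {
    println(sayHello())   // Hello World
    println(helloNamed()) // Hello World
}
```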

Here is an example with a parameter:

```kotlin
fun hello(name: String): String {
    return "Hello, $name"
}
```

The lambda version:

```kotlin
{ name: String -> "Hello, $name" }
```

You can call the lambda immediately by passing the argument in parentheses after the closing curly brace:

```kotlin
{ name: String -> "Hello, $name" }("Matt")
```
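Here is a minimal runnable version of that immediate call, assuming the result is stored in a hypothetical top-level value named `greeting`:

```kotlin
// Define the lambda and call it in a single expression.
val greeting: String = { name: String -> "Hello, $name" }("Matt")

fun main() {
    println(greeting) // Hello, Matt
}
```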

It’s also possible to assign a lambda to a variable:

```kotlin
val hello = { name: String -> "Hello, $name" }
```
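The compiler infers the type of `hello` as `(String) -> String`. As a sketch, that type can also be declared on the variable, in which case the parameter type can be left off inside the lambda. `helloTyped` is a hypothetical name used only for comparison.

```kotlin
// Inferred: the compiler works out the type (String) -> String.
val hello = { name: String -> "Hello, $name" }

// Explicit: the variable's type is declared, so the lambda's
// parameter type does not need to be repeated.
val helloTyped: (String) -> String = { name -> "Hello, $name" }

fun main() {
    println(hello("Matt"))      // Hello, Matt
    println(helloTyped("Matt")) // Hello, Matt
}
```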

You can then call the variable the lambda has been assigned to, just as if it were a named function:

```kotlin
hello("Matt")
```
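Calling `hello("Matt")` is shorthand for the `invoke` operator that every Kotlin function type provides. The short sketch below, assuming the same `hello` lambda, calls it both ways to show they are equivalent.

```kotlin
val hello = { name: String -> "Hello, $name" }

fun main() {
    // Both lines call the same lambda; hello(...) is sugar for hello.invoke(...).
    println(hello("Matt"))        // Hello, Matt
    println(hello.invoke("Matt")) // Hello, Matt
}
```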

Lambdas provide us with a convenient way to pass logic into other functions without having to define that logic in a named function. This is very useful when processing lists or arrays of data. We’ll take a look at processing lists with lambdas in the next post!
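As a small preview, here is a sketch that passes a lambda to the standard library's `map` function, which applies it to every element of a list and collects the results. The `greetAll` helper is hypothetical. Because the lambda is the last (and only) argument, Kotlin lets it be written after `map` without parentheses.

```kotlin
// Hypothetical helper: the lambda supplies the logic, map applies it
// to each name and returns a new list.
fun greetAll(names: List<String>): List<String> =
    names.map { name -> "Hello, $name" }

fun main() {
    val names = listOf("Matt", "Ana")
    println(greetAll(names)) // [Hello, Matt, Hello, Ana]
}
```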



InfoQ Reading List

New Research Reassesses the Value of AGENTS.md Files for AI Coding

Despite widespread industry recommendations, a new ETH Zurich paper concludes that AGENTS.md files may often hinder AI coding agents. The researchers recommend omitting LLM-generated context files entirely and limiting human-written instructions to non-inferable details, such as highly specific tooling or custom build commands.

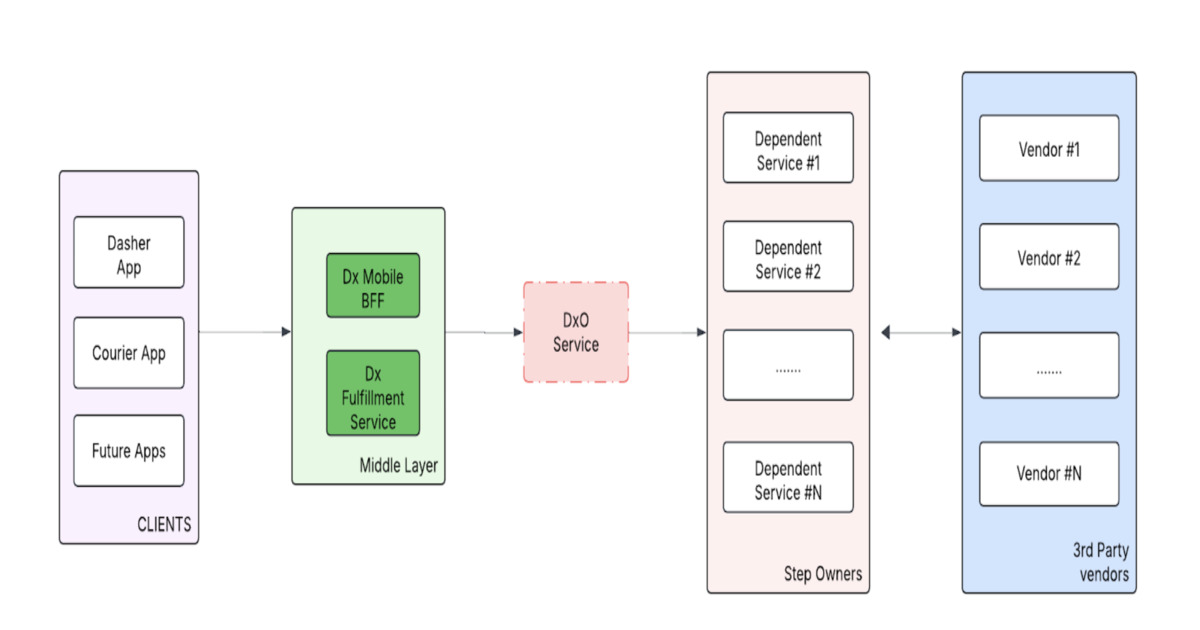

By Bruno CouriolArchitecting for Global Scale: Inside DoorDash’s Unified, Composable Dasher Onboarding Platform

DoorDash has rebuilt its Dasher onboarding into a unified, modular platform to support global expansion. The new architecture uses reusable step modules, a centralized status map, and workflow orchestration to ensure consistent, localized onboarding experiences. This design reduces complexity, supports market-specific variations, and enables faster rollout to new countries.

By Leela KumiliCNCF Graduates Dragonfly, Marking Major Milestone for Cloud-Native Image Distribution

The Cloud Native Computing Foundation (CNCF) announced recently that Dragonfly, its open source image and file distribution system, has reached graduated status, the highest maturity level within the CNCF project lifecycle.

By Craig RisiOpenAI Secures AWS Distribution for Frontier Platform in $110B Multi-Cloud Deal

OpenAI's $110B funding includes AWS as the exclusive third-party distributor for the Frontier agent platform, introducing an architectural split: Azure retains stateless API exclusivity; AWS gains stateful runtime environments via Bedrock. Deal expands the existing $38B AWS agreement by $100B and commits 2GW of Trainium capacity.

By Steef-Jan WiggersPresentation: So You’ve Decided To Do a Technical Migration

Sophie Koonin discusses the realities of large-scale technical migrations, using Monzo’s shift to TypeScript as a roadmap. She explains how to handle "bends in the road," from documentation and tooling to setting measurable milestones. Sophie shares vital lessons on balancing technical debt with feature work and provides a framework for deciding if a migration is truly worth the effort.

By Sophie Koonin

© 2026 Created by Michael Levin.
