Codetown

Codetown ::: a software developer's community

Coroutine-First Android Architecture w/ Rick Busarow

Chicago Kotlin User Group x Android Listeners

Hosted at GrubHub, July 17

Coroutines are the new hot stuff, and right now they’re being added to lots of libraries. But what if you don’t want to use an alpha01 release in production code? What can coroutines do on their own, right now? In this talk, we’ll discuss the power behind structured concurrency and how we can use it to make our entire stack lifecycle-aware. We’ll look at examples of how to turn any callback or long-running code into a coroutine, and we’ll go over when and how to use Channels to handle hot streams of data without leaking. Finally, and most importantly, we’ll see how we can use these tools to inform our application architecture, so that we can quickly write maintainable and testable features. Thanks to GrubHub for hosting!
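As a rough illustration of the "turn any callback into a coroutine" idea mentioned in the abstract, here is a minimal sketch using `suspendCancellableCoroutine` from kotlinx.coroutines. It is not taken from the talk itself. `UserCallback`, `LegacyUserClient`, and `awaitUser` are hypothetical names invented for this example, and the client calls back synchronously only to keep the sketch self-contained.

```kotlin
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.suspendCancellableCoroutine
import kotlin.coroutines.resume
import kotlin.coroutines.resumeWithException

// Hypothetical callback-style API, standing in for any legacy listener.
interface UserCallback {
    fun onSuccess(name: String)
    fun onError(error: Throwable)
}

// Hypothetical client. A real one would do its work asynchronously;
// this one calls back immediately so the example runs on its own.
class LegacyUserClient {
    fun fetchUser(id: Int, callback: UserCallback) {
        if (id > 0) callback.onSuccess("user-$id")
        else callback.onError(IllegalArgumentException("invalid id: $id"))
    }

    // Placeholder for whatever cancellation hook the real API offers.
    fun cancel() {}
}

// Wraps the callback API in a suspend function. If the calling coroutine
// is cancelled while waiting, the underlying request is cancelled too.
suspend fun LegacyUserClient.awaitUser(id: Int): String =
    suspendCancellableCoroutine { continuation ->
        continuation.invokeOnCancellation { cancel() }
        fetchUser(id, object : UserCallback {
            override fun onSuccess(name: String) = continuation.resume(name)
            override fun onError(error: Throwable) =
                continuation.resumeWithException(error)
        })
    }

fun main() = runBlocking {
    println(LegacyUserClient().awaitUser(42)) // prints "user-42"
}
```

Because the wrapper is an ordinary suspend function, it takes part in structured concurrency: cancelling the scope that called it (for example, a lifecycle-bound scope) also cancels the pending request through `invokeOnCancellation`.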

Notes

Welcome to Codetown!

Codetown is a social network. It's got blogs, forums, groups, personal pages and more! You might think of Codetown as a funky camper van with lots of compartments for your stuff and a great multimedia system, too! Best of all, Codetown has room for all of your friends.

Created by Michael Levin Dec 18, 2008 at 6:56pm. Last updated by Michael Levin May 4, 2018.

InfoQ Reading List

Presentation: What I Wish I Knew When I Started with Green IT

Ludi Akue discusses how the tech sector’s rising emissions impact our global climate goals. Drawing from her experience as a CTO, she explains seven key lessons for implementing Green IT. She shares insights on LCA assessments, the paradox of microservices, and why FinOps doesn’t always equal green.

By Ludi AkueVue Router 5: File-Based Routing Into Core with No Breaking Changes

Vue Router 5.0 has integrated unplugin-vue-router into its core, enhancing file-based routing and TypeScript support. This transition release boasts no breaking changes, simplifies dependencies, and introduces experimental features like data loaders and improved editor tooling. Ideal for Vue.js developers, it positions itself as a bridge to the upcoming ESM-only version 6.

By Daniel CurtisPodcast: [Video Podcast] AI Autonomy Is Redefining Architecture: Boundaries Now Matter Most

This conversation explores why generative AI is not just another automation layer but a shift into autonomy. The key idea is that we cannot retrofit AI into old procedural workflows and expect it to behave. Once autonomy is introduced, systems will drift, show emergent behaviour, and act in ways we did not explicitly script.

By Jesper LowgrenGoogle Launches Automated Review Feature in Gemini CLI Conductor

Google has enhanced its Gemini CLI extension, Conductor, by adding support for automated reviews. The company says this update allows Conductor "to go beyond just planning and execution into validation", enabling it to check AI-generated code for quality and adherence to guidelines, strengthening confidence, safety, and control in AI-assisted development workflows.

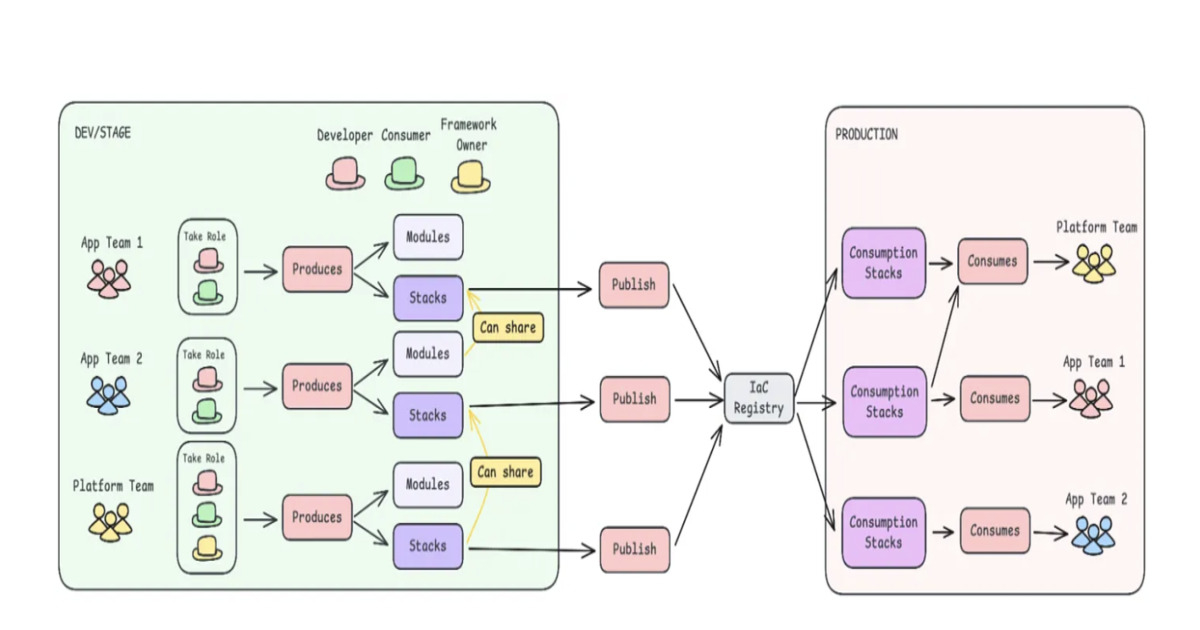

By Sergio De SimoneFrom Central Control to Team Autonomy: Rethinking Infrastructure Delivery

Adidas engineers describe shifting from a centralized Infrastructure-as-Code model to a decentralized one. Five teams autonomously deployed over 81 new infrastructure stacks in two months, using layered IaC modules, automated pipelines, and shared frameworks. The redesign illustrates how to scale infrastructure delivery while maintaining governance at scale.

By Leela Kumili

© 2026 Created by Michael Levin.
