Codetown ::: a software developer's community

Developing Rich Internet Applications (RIAs) by combining Flex with TurboGears.

"Flex is a (mostly) open source IDE from Adobe that seamlessly combines XML layout definitions with ActionScript programming to create Flash applications. A fairly robust application can be built using the XML layout definitions with minimal ActionScript programming. Flex applications support a wide variety of server-side APIs, including XML and JSON.

TurboGears is an open source web framework written in Python that is similar to Ruby on Rails. TurboGears supports all the major RDBMSs and uses either SQLAlchemy or SQLObject to provide object-relational mapping (ORM), which simplifies server-side coding. A basic web application can be implemented in just two files: a database model and a controller containing the business logic. Although TurboGears is primarily used with any one of several HTML templating engines, it also supports JSON."
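The "model plus controller" split described above can be sketched with only the Python standard library. This is not TurboGears code: `sqlite3` stands in for the ORM, a plain function stands in for an exposed controller method, and the `task` table, `create_model`, and `list_tasks` are hypothetical names chosen for illustration.

```python
import json
import sqlite3


# "Model" half: a hypothetical one-table schema with two sample rows.
def create_model(conn):
    conn.execute(
        "CREATE TABLE task (id INTEGER PRIMARY KEY, title TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO task (title) VALUES (?)",
        [("Write model",), ("Write controller",)],
    )
    conn.commit()


# "Controller" half: business logic that returns a plain dict,
# which a framework could then serialize to JSON for the client.
def list_tasks(conn):
    rows = conn.execute("SELECT id, title FROM task ORDER BY id").fetchall()
    return {"tasks": [{"id": row[0], "title": row[1]} for row in rows]}


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    create_model(connection)
    print(json.dumps(list_tasks(connection)))
```

In a real framework the two halves would normally live in separate files, with the framework handling routing and serialization.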

This presentation will focus on rapid development of RIAs using Flex on the client side, first with static XML files to simulate server-side responses, then migrating to JSON with a TurboGears backend.
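As a rough illustration of that migration path, the sketch below takes a static XML stub of the kind a client could load during early development and produces the equivalent JSON payload a backend might later serve. The `tasks` data, the field names, and both helper functions are assumptions made for this example, not material from the presentation.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical static XML stub standing in for a server response.
STATIC_XML = """<tasks>
  <task id="1"><title>Write model</title><done>true</done></task>
  <task id="2"><title>Write controller</title><done>false</done></task>
</tasks>"""


def records_from_xml(xml_text):
    """Parse the XML stub into a list of plain dicts."""
    root = ET.fromstring(xml_text)
    return [
        {
            "id": int(node.get("id")),
            "title": node.findtext("title"),
            "done": node.findtext("done") == "true",
        }
        for node in root.findall("task")
    ]


def records_to_json(records):
    """Serialize the same records the way a JSON endpoint might return them."""
    return json.dumps({"tasks": records}, sort_keys=True)


if __name__ == "__main__":
    print(records_to_json(records_from_xml(STATIC_XML)))
```

Keeping the record shape identical in both formats means the client only has to swap its parsing step when the stub is replaced by a live backend.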

BIO

Fred Sells is employed at Adventist Care Centers, where he develops web applications in Python and Java. He has been programming in Python since 1990 and Java since 2000. Prior to this, he was founder and President of Sunrise Software International, which developed ezX®, a GUI builder for the Unix environment. Fred has also consulted for the New York Stock Exchange and developed command and control software for the U.S. Navy. He is a graduate of Purdue University and is currently working on an MS in Computer Information Science from Boston University.

Looking for Jobs or Staff?

Check out the Codetown Jobs group.

InfoQ Reading List

Presentation: Beyond the Warehouse: Why BigQuery Alone Won’t Solve Your Data Problems

Sarah Usher discusses the architectural "breaking point" where warehouses like BigQuery struggle with latency and cost. She explains the necessity of a conceptual data lifecycle (Raw, Curated, Use Case) to regain control over lineage and innovation. She shares practical strategies to design a single source of truth that empowers both ML teams and analytics without bottlenecking scale.

By Sarah UsherJava Explores Carrier Classes to Extend Data-Oriented Programming Beyond Records

The OpenJDK Amber project has published a new design note proposing “carrier classes” and “carrier interfaces” to extend record-style data modeling to more Java types. The proposal preserves concise state descriptions, derived methods, and pattern matching, while relaxing structural constraints that limit records.

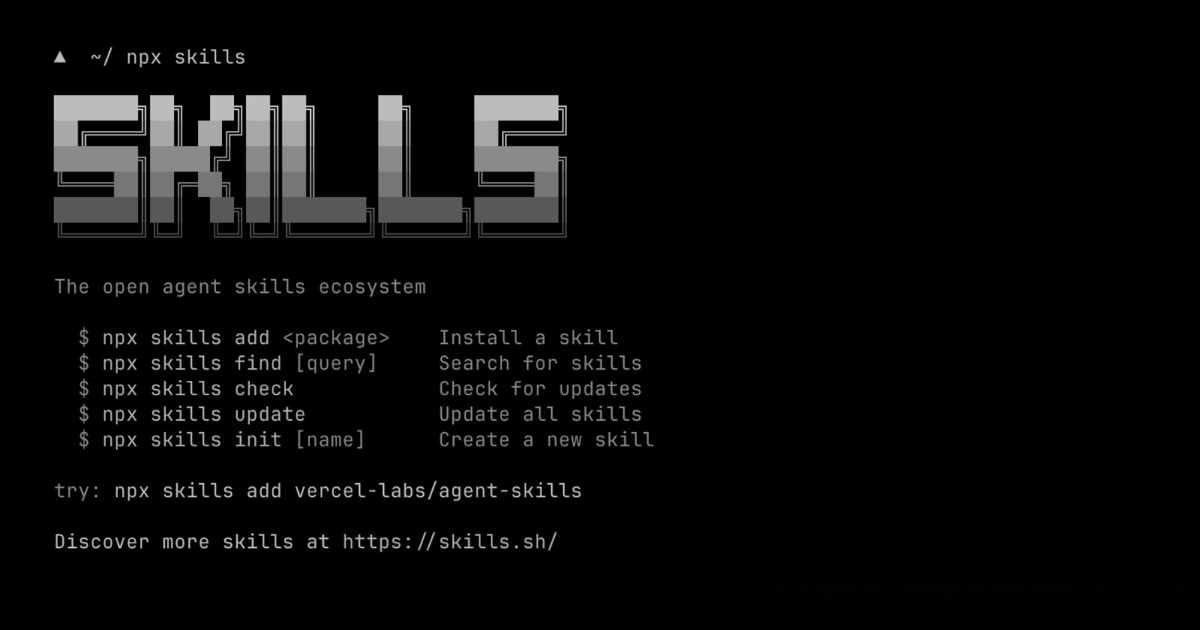

By A N M Bazlur RahmanVercel Introduces Skills.sh, an Open Ecosystem for Agent Commands

Vercel has released Skills.sh, an open-source tool designed to provide AI agents with a standardized way to execute reusable actions, or skills, through the command line.

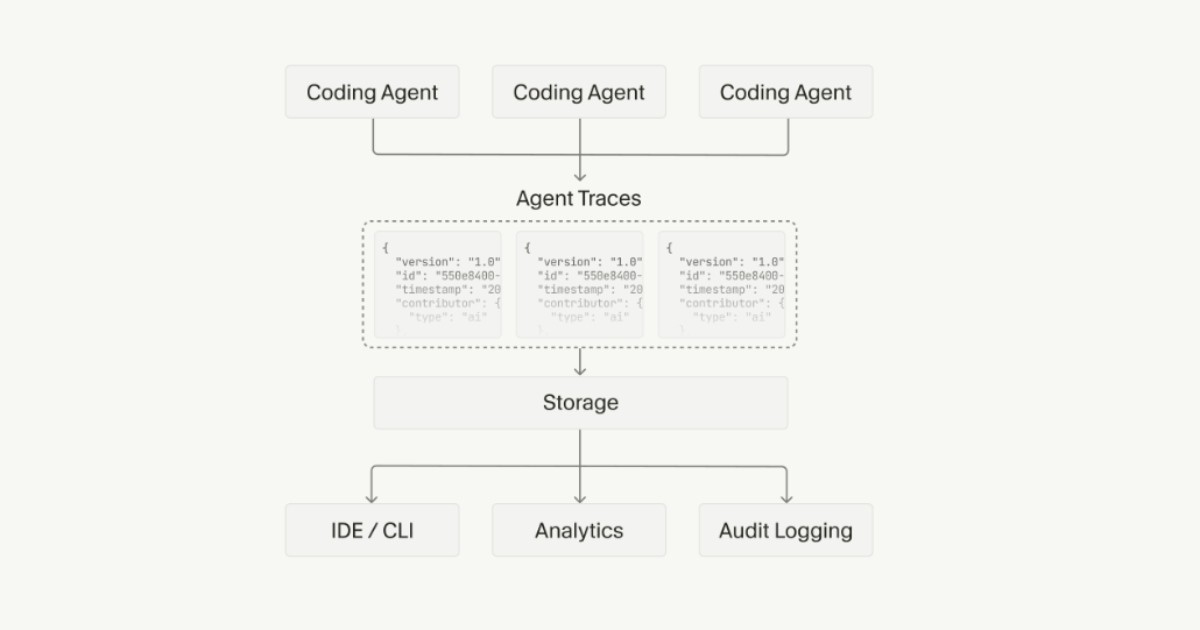

By Daniel DominguezAgent Trace: Cursor Proposes an Open Specification for AI Code Attribution

Cursor has published Agent Trace, a draft open specification aimed at standardizing how AI-generated code is attributed in software projects. Released as a Request for Comments (RFC), the proposal defines a vendor-neutral format for recording AI contributions alongside human authorship in version-controlled codebases.

By Robert KrzaczyńskiArticle: From Alert Fatigue to Agent-Assisted Intelligent Observability

As systems grow, observability becomes harder to maintain and incidents harder to diagnose. Agentic observability layers AI on existing tools, starting in read-only mode to detect anomalies and summarize issues. Over time, agents add context, correlate signals, and automate low-risk tasks. This approach frees engineers to focus on analysis and judgment.

By Rohit Dhawan

© 2026 Created by Michael Levin.

Powered by

![]()