Codetown ::: a software developer's community

Meeting Mycroft: An Open AI Platform You Can Order Around By Voice

Mycroft developer Ryan Sipes, speaking from the show floor of this year's OSCON in Austin, Texas (see our video interview here), says that what started out as a weekend project to use voice input and some light AI to locate misplaced tools in a makerspace morphed into a much more ambitious, and successfully crowd-funded, project -- hosted at the Lawrence Center for Entrepreneurship in Lawrence, Kansas -- when he and his fellow developers realized that speech recognition, and the interfaces that exploit it, were in a much more rudimentary state than they had initially assumed.

How ambitious? Mycroft bills itself as "an open hardware artificial intelligence platform"; the goal is to allow you to "interact with everything in your house, and interact with all your data, through your voice." That's a familiar aim of late, but mostly from a shortlist of the biggest names in technology. Apple's Siri is exclusive to (and helps sell) Apple hardware; Google's voice interface likewise sells Android phones and tablets, and helps round out Google's apps-and-interfaces-for-everything approach. Amazon and Microsoft have poured resources into voice recognition systems, too -- Amazon's Echo, running the company's Alexa voice service, is probably the most direct parallel to the Mycroft system that was on display at OSCON, in that it provides a dedicated box loaded with mics and a speaker system for 2-way voice interaction.

The Mycroft system, though, is based on two of the first names in open hardware -- Raspberry Pi and Arduino -- and it's meant to be and stay open; all of its software is released under GPL v3. The initial hardware for Mycroft includes RCA ports, as well as an ethernet jack, 4 USB ports, HDMI, and dozens of addressable LEDs that form Mycroft's "face." That HDMI output might not be immediately useful, but Sipes points out that the hardware is powerful enough to play Netflix films, or to run multimedia software like Kodi, and to control them by voice. Unusually for a consumer device, even one aimed at hardware hackers, Mycroft also includes an accessible ribbon-cable port, for users who'd like to hook up a camera or some other peripheral. Two other "ports" (of a sort) might appeal to just that kind of user, too: if you pop out the plugs emblazoned with the OSI Open Hardware logo, two holes on each side of Mycroft's case facilitate adding it to a robot body or other mounting system.

The open-source difference in Mycroft isn't just in the hacker-friendly hardware. The real star of the show is the software (despite the hardware on offer, "We're a software company," says Sipes), and that's proudly open as well. The Python-based project draws on, and creates, open source back-end tools, but it is not tied to any particular back end for interpreting or acting on the voice input it receives. The team has open sourced several tools so far: the Adapt intent parser, text-to-speech engine Mimic (based on a fork of CMU's Flite), and open speech-to-text engine OpenSTT.

The commercial projects named above (Siri et al.) may offer various degrees of privacy or extensibility, but ultimately they all come from "large companies that work really hard to mine your data" and to keep each user in a silo, says Sipes. By contrast, "We're like Switzerland." With Mycroft, the speech recognition and speech synthesis tools are swappable, and there's an active dev community adding new voice-activated capabilities ("skills") to the system.

And if you can program Python, your idea could be next.
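To give a feel for what "swappable" engines and add-on skills could look like in Python, here is a minimal sketch. It is not Mycroft's actual code or API: the names `SpeechToText`, `TextToSpeech`, `Assistant`, `register_skill`, and the stand-in engines are all hypothetical, and the "audio" is just text bytes so the example runs without any hardware or third-party libraries.

```python
from typing import Callable, Dict, Protocol


class SpeechToText(Protocol):
    """Hypothetical interface: any recognizer with this method can be plugged in."""

    def transcribe(self, audio: bytes) -> str: ...


class TextToSpeech(Protocol):
    """Hypothetical interface: any synthesizer with this method can be plugged in."""

    def speak(self, text: str) -> bytes: ...


class EchoSTT:
    """Stand-in recognizer: pretends the audio bytes are already UTF-8 text."""

    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class UppercaseTTS:
    """Stand-in synthesizer: the 'audio' it returns is upper-cased text bytes."""

    def speak(self, text: str) -> bytes:
        return text.upper().encode("utf-8")


# A skill, in this sketch, is just a function from an utterance to a reply.
Skill = Callable[[str], str]


class Assistant:
    """Wires one recognizer, one synthesizer, and any number of skills together."""

    def __init__(self, stt: SpeechToText, tts: TextToSpeech) -> None:
        self.stt = stt
        self.tts = tts
        self.skills: Dict[str, Skill] = {}

    def register_skill(self, keyword: str, handler: Skill) -> None:
        # Naive keyword trigger; a real intent parser would be far richer.
        self.skills[keyword.lower()] = handler

    def handle(self, audio: bytes) -> bytes:
        utterance = self.stt.transcribe(audio)
        words = utterance.lower().split()
        for keyword, handler in self.skills.items():
            if keyword in words:
                return self.tts.speak(handler(utterance))
        return self.tts.speak("Sorry, I don't know how to do that.")


def find_tool(utterance: str) -> str:
    """Toy skill echoing the makerspace origin story; the answer is hard-coded."""
    return "The hammer is on shelf two."


assistant = Assistant(stt=EchoSTT(), tts=UppercaseTTS())
assistant.register_skill("hammer", find_tool)
print(assistant.handle(b"where is the hammer").decode("utf-8"))
```

Because `Assistant` depends only on the two small interfaces, replacing `EchoSTT` or `UppercaseTTS` with a different engine would not require touching any skill, which is the kind of separation the swappable design implies.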

InfoQ Reading List

Vercel Releases React Best Practices Skill with 40+ Performance Rules for AI Agents

Vercel has launched "react-best-practices," an open-source repository featuring 40+ performance optimization rules for React and Next.js apps. Tailored for AI coding agents yet valuable for developers, it categorizes rules based on impact, assisting in enhancing performance, bundle size, and architectural decisions.

By Daniel CurtisKubernetes Introduces Node Readiness Controller to Improve Pod Scheduling Reliability

The Kubernetes project recently announced a new core controller called the Node Readiness Controller, designed to enhance scheduling reliability and cluster health by making the API server’s view of node readiness more accurate.

By Craig RisiPresentation: Platforms for Secure API Connectivity With Architecture as Code

Jim Gough discusses the transition from accidental architect to API program leader, explaining how to manage the complexity of secure API connectivity. He shares the Common Architecture Language Model (CALM), a framework designed to bridge the developer-security gap. By leveraging architecture patterns, engineering leaders can move from six-month review cycles to two-hour automated deployments.

By Jim GoughMicrosoft Open Sources Evals for Agent Interop Starter Kit to Benchmark Enterprise AI Agents

Microsoft's Evals for Agent Interop is an open-source starter kit that enables developers to evaluate AI agents in realistic work scenarios. It features curated scenarios, datasets, and an evaluation harness to assess agent performance across tools like email and calendars.

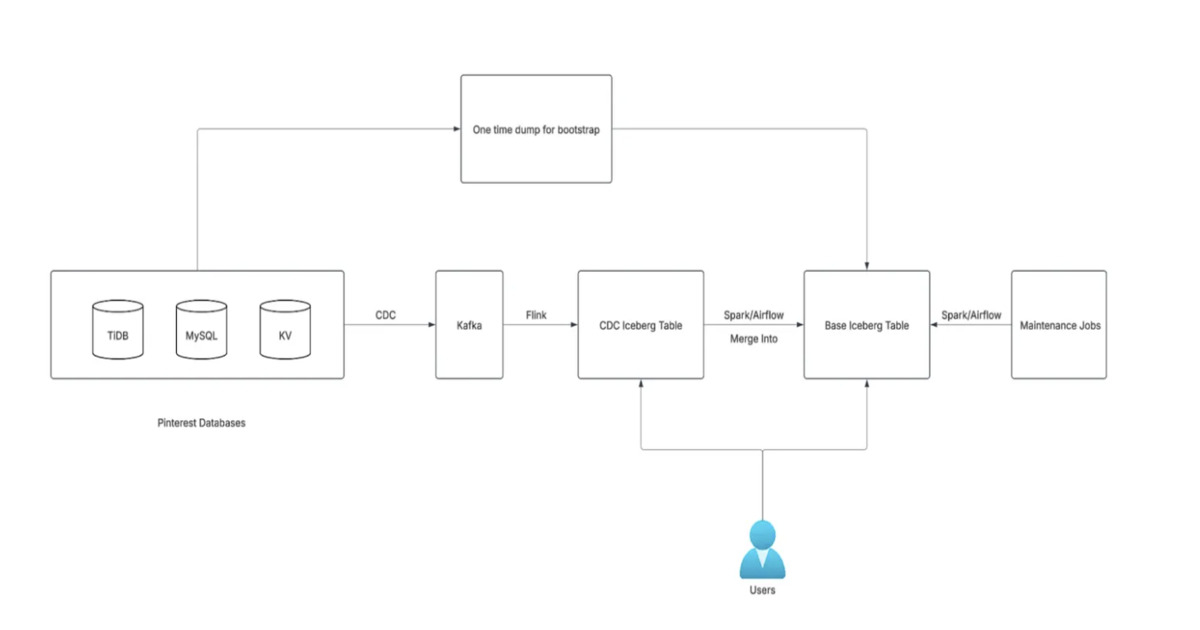

By Edin KapićPinterest’s CDC-Powered Ingestion Slashes Database Latency from 24 Hours to 15 Minutes

Pinterest launched a next-generation CDC-based database ingestion framework using Kafka, Flink, Spark, and Iceberg. The system reduces data availability latency from 24+ hours to 15 minutes, processes only changed records, supports incremental updates and deletions, and scales to petabyte-level data across thousands of pipelines, optimizing cost and efficiency.

By Leela Kumili

© 2026 Created by Michael Levin.
